New research reveals that organisations are suffering from what has been dubbed the ‘data gap’. This has been found to be the major contributor to inaccurate data, which in turn hurts the bottom line.

The study by Quantexa, which surveyed nearly 350 technology and data decision-makers across key sectors including financial services, insurance and the public sector in the UK, France, the Netherlands and Spain, found that data and datatech are being let down by the managers in charge of them.

2022 has already been named the year of data transformation, following hot on the heels of the pandemic-induced digital transformation seen over the past 24 months. The pandemic has accelerated every organisation’s digitisation plans, whether it is a global law firm with offices in every major city or an independent café in Milton Keynes. In fact, research shows that within the first two months of lockdown, digital adoption jumped forward by five years!

By its very nature, digitisation brings data, and lots of it. McKinsey estimates that 64.2 zettabytes of data were created last year, almost double the amount created just two years earlier. To put this in perspective, that equates to an extra trillion movies’ worth of data created in this short space of time, or 1 million movies every minute!
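As a quick sanity check on that comparison, spreading 1 million movies per minute across the two-year period does come to roughly a trillion movies:

```latex
10^{6}\,\frac{\text{movies}}{\text{min}} \times 60\,\frac{\text{min}}{\text{h}} \times 24\,\frac{\text{h}}{\text{day}} \times 365\,\frac{\text{days}}{\text{yr}} \times 2\,\text{yr} \approx 1.05 \times 10^{12}\ \text{movies}
```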

It’s all well and good to have all this data, but it will only ever benefit a business if it is well managed. More data does not equal better data. If anything, more data compounds the problem of inaccurate data, as there is more of it to check and clean. It is therefore not surprising that, according to Anaconda, data scientists spend about 45 per cent of their time on data preparation tasks, including cleaning data. Data cleansing alone accounts for more than a quarter of the average data scientist’s day, and 85 per cent of data scientists say it is the worst part of their job. The most important and most rewarding part of their job, the modelling, consumes just 11 per cent of their time.

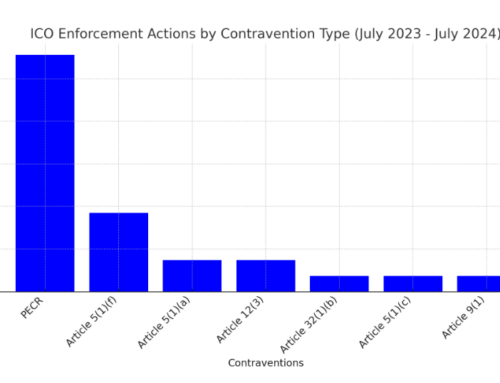

In addition, the Quantexa study revealed that more than two-fifths (43 per cent) of data managers believe that inaccurate data can trigger regulatory scrutiny and compliance issues, while a similar proportion (42 per cent) cite problems with customer experience and retention, and the same proportion again (42 per cent) believe that it drains resources through an increased manual data workload.

Being unable to derive strategic advantage from data because of inaccuracies, whether for insight and decision-making or for customer communications, is concerning, particularly since over 90 per cent of business leaders believe that analytics and data-driven marketing will increase in importance.

The solution?

Sadly, there is no silver bullet. But there are cost-effective options available to improve the accuracy of data, for example correcting spellings, removing duplicate records and identifying people who have died or moved house. All of this can be done at scale and at speed in a secure environment. Getting the basics right not only reduces inaccuracies that would otherwise be compounded further up the chain, but also supports compliance with anti-money laundering (AML), know your customer (KYC) and GDPR requirements. The data gap is a significant problem for businesses, but it can at least be narrowed by implementing fundamental data cleansing from the start.
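To make those basic steps more concrete, here is a minimal Python sketch, assuming each record holds only a name, postcode and town. The spelling lookup, the suppression list of people known to have died or moved, and every name in the code (`Record`, `normalise`, `cleanse`, `SPELLING_FIXES`) are hypothetical illustrations, not how Cygnus or SwiftSuite actually work.

```python
from dataclasses import dataclass

# Hypothetical lookup of known misspellings -> corrected town name.
SPELLING_FIXES = {"londn": "london", "manchster": "manchester"}


@dataclass(frozen=True)
class Record:
    name: str
    postcode: str
    town: str


def normalise(record: Record) -> Record:
    """Collapse whitespace and lower-case name and town, strip spaces from
    and upper-case the postcode, then apply the spelling lookup to the town."""
    name = " ".join(record.name.split()).lower()
    postcode = record.postcode.replace(" ", "").upper()
    town = " ".join(record.town.split()).lower()
    town = SPELLING_FIXES.get(town, town)
    return Record(name, postcode, town)


def cleanse(records, suppression_keys):
    """Normalise each record, then drop exact duplicates (keeping the first)
    and any record whose normalised (name, postcode) is on the hypothetical
    suppression list, e.g. deceased or gone-away contacts."""
    seen = set()
    cleaned = []
    for raw in records:
        rec = normalise(raw)
        key = (rec.name, rec.postcode)
        if key in suppression_keys or key in seen:
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned


# Usage: suppression keys must already be in normalised form.
records = [
    Record("Jane  Smith", "mk9 1aa", "Milton Keynes"),
    Record("jane smith", "MK91AA", "milton keynes"),  # duplicate of the first
    Record("John Doe", "sw1a 1aa", "Londn"),           # misspelt town
    Record("Ann Lee", "M1 1AE", "Manchster"),          # on the suppression list
]
suppressed = {("ann lee", "M11AE")}
result = cleanse(records, suppressed)
for rec in result:
    print(rec)
```

Matching here is exact on the normalised name and postcode. Real-world matching usually needs fuzzy comparison as well, to catch near-duplicates such as “Jon” and “John”.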

For more information about our solutions that minimise the data gap, including Cygnus, our desktop-based solution, and SwiftSuite, our cloud-based portfolio, please get in touch with Ben Warren at ben.warren@thesoftwarebureau.com. We’ve recently upgraded the data processing power within SwiftSuite, which now enables batch processing in minutes, saving time, money and resources.