Why data hygiene must become a priority post-Covid

According to Fortune Business Insights, the global machine learning and analytics market is expected to grow from $21.17 billion in 2022 to $209.91 billion by 2029, at a CAGR of 38.8 percent.
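As a rough check, the standard compound annual growth rate (CAGR) formula, applied over the seven years from 2022 to 2029, reproduces the quoted rate to within rounding:

$$
\text{CAGR} = \left(\frac{V_{2029}}{V_{2022}}\right)^{1/7} - 1 = \left(\frac{209.91}{21.17}\right)^{1/7} - 1 \approx 9.915^{1/7} - 1 \approx 1.388 - 1 \approx 38.8\%
$$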

Clearly it is big business, and the global impact of the Covid-19 pandemic has served to accelerate demand. Increasingly, organisations in every sector are turning to computers to make decisions, from insurers calculating a customer’s risk profile to airlines working out how much food and drink to carry on board. Every industry, without exception, can apply analytics to its data to make faster, better-informed decisions.

But, and it is a big BUT, analytics and machine learning can only ever be as strong as the data that fuels them. If that data is out of date or, worse, inaccurate, the algorithms built on it will be biased and the decisions they drive will be flawed.

Why is this important?

It’s important because organisations have a responsibility to their customers. Under GDPR, companies are required to know the provenance of the data they hold and process. Furthermore, consumers have the right to know exactly what their personal information is being used for, and why decisions based on their data have been made. This has led to a requirement known as ‘explainability’: the ability of a business to explain, in human terms, what is going on inside the machine learning systems it uses. Because customer data is a primary input to the algorithms built with machine learning, organisations have a legal responsibility to understand these models.

There are scores of famous AI failure stories:

- Groupon’s location-based deals, which in the UK market ended up being targeted far too widely to be relevant

- Microsoft launched a chatbot, Tay, that within a day was posting deeply racist and sexist messages

- A Google algorithm had the unsavoury tendency to classify black people as gorillas

- Amazon got into hot water when it found that its advanced AI hiring software heavily favoured men for technical positions

- Facebook accidentally got a Palestinian man arrested in Israel by mistranslating a caption he had posted on a photo of himself. The man underwent police questioning for several hours until the mistake came to light.

Groupon did not want to send poorly targeted deals, Microsoft did not design a misogynist chatbot, Google did not mean to be racist, Amazon did not deliberately try to discriminate against women and Facebook did not intend to get users arrested. Each failure is the result of what is known as “algorithmic bias”, an increasingly common problem for data scientists. It has been estimated that within five years there will be more biased algorithms than clean ones.

Data scientists are among the most sought-after (and well-paid) professionals in today’s job market. However, one widely cited survey found that data scientists spend 60 percent of their time cleaning and preparing data for analysis, and that 57 percent of them view this as the least enjoyable part of the job. The ugly truth is that data science isn’t all glamour: most of the time goes on preparing data and dealing with quality issues, including:

- Noisy data: Data that contains a large amount of conflicting or misleading information.

- Dirty data: Data that contains missing values, categorical and character features with many levels, and inconsistent and erroneous values.

- Sparse data: Data that contains very few actual values and is instead composed mostly of zeros or missing values.

- Inadequate data: Data that is either incomplete or biased.
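Some of these issues can be spotted with very simple checks before any modelling starts. The Python sketch below is illustrative only: the field names (`customer_id`, `postcode`, `country`, `purchases`), the sample records and the helper functions are all hypothetical. It measures missing values and sparsity per field, flags customer IDs that carry conflicting values (one symptom of noisy data), and groups category labels that differ only in case or spacing (a common form of dirty data).

```python
from collections import defaultdict

# Hypothetical sample data: a tiny customer table as a list of dicts.
records = [
    {"customer_id": 1, "postcode": "SW1A 1AA", "country": "UK", "purchases": 0},
    {"customer_id": 1, "postcode": "EC1A 1BB", "country": "uk", "purchases": 3},
    {"customer_id": 2, "postcode": "", "country": "UK ", "purchases": 0},
    {"customer_id": 3, "postcode": "M1 1AE", "country": "France", "purchases": 0},
]


def profile_field(rows, field):
    """Share of rows where the field is missing, and where it is missing or zero."""
    values = [row.get(field) for row in rows]
    total = len(values) or 1  # avoid dividing by zero on an empty table
    missing = sum(1 for v in values if v is None or v == "")
    empty_or_zero = sum(1 for v in values if v is None or v == "" or v == 0)
    return {"missing_rate": missing / total, "sparsity": empty_or_zero / total}


def conflicting_keys(rows, key, field):
    """Keys (e.g. customer IDs) that map to more than one distinct non-empty value."""
    seen = defaultdict(set)
    for row in rows:
        value = row.get(field)
        if value not in (None, ""):
            seen[row[key]].add(value)
    return sorted(k for k, values in seen.items() if len(values) > 1)


def inconsistent_labels(rows, field):
    """Category labels that differ only by letter case or surrounding whitespace."""
    groups = defaultdict(set)
    for row in rows:
        value = row.get(field)
        if isinstance(value, str) and value.strip():
            groups[value.strip().lower()].add(value)
    return {norm: sorted(raw) for norm, raw in groups.items() if len(raw) > 1}


print(profile_field(records, "postcode"))   # {'missing_rate': 0.25, 'sparsity': 0.25}
print(profile_field(records, "purchases"))  # {'missing_rate': 0.0, 'sparsity': 0.75}
print(conflicting_keys(records, "customer_id", "postcode"))  # [1]
print(inconsistent_labels(records, "country"))  # {'uk': ['UK', 'UK ', 'uk']}
```

A conflicting postcode for the same customer is often a sign that someone has moved house and only one record was updated. Checks like these flag symptoms; they cannot tell you whether a dataset is inadequate or biased, which still needs human judgement about how the data was collected.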

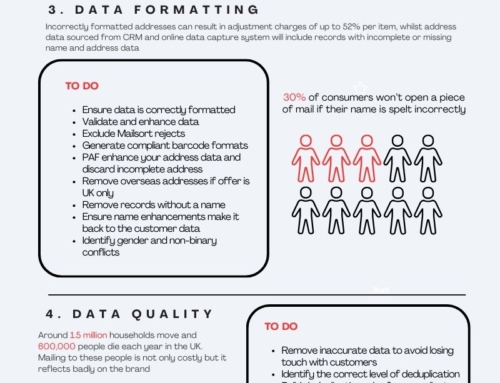

This is where data hygiene comes in. By following a regular data cleansing regime, an organisation can ensure that, at the most basic level, its customer data is as accurate as possible, which provides a firm basis for explainability. For instance, identifying and removing deceased customers (who are classified as a vulnerable group) and updating the personal data of people who have moved house both reduce the risk of bias and create a better foundation for marketing analytics and CRM initiatives.
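The two hygiene steps mentioned here, suppressing deceased customers and updating movers, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any particular cleansing service works: the `Customer` record, the `match_key` and `cleanse` functions, the deceased list and the movers mapping are all hypothetical, and records are matched only on a normalised name and postcode.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Customer:
    name: str
    address: str
    postcode: str


def match_key(name, postcode):
    """Normalise case and spacing so trivial formatting differences still match."""
    return (" ".join(name.lower().split()), postcode.replace(" ", "").upper())


def cleanse(customers, deceased, movers):
    """Suppress records on the deceased list, then apply change-of-address updates.

    deceased: iterable of (name, postcode) pairs (hypothetical suppression data)
    movers:   dict mapping (name, old_postcode) -> (new_address, new_postcode)
    Returns (cleaned_customers, suppressed_count, updated_count).
    """
    deceased_keys = {match_key(n, p) for n, p in deceased}
    mover_map = {match_key(n, p): new for (n, p), new in movers.items()}
    cleaned, suppressed, updated = [], 0, 0
    for customer in customers:
        key = match_key(customer.name, customer.postcode)
        if key in deceased_keys:
            suppressed += 1  # remove rather than update: never re-mail the deceased
            continue
        if key in mover_map:
            new_address, new_postcode = mover_map[key]
            customer = replace(customer, address=new_address, postcode=new_postcode)
            updated += 1
        cleaned.append(customer)
    return cleaned, suppressed, updated


# Made-up example records and reference data.
customers = [
    Customer("Jane Smith", "1 High Street", "AB1 2CD"),
    Customer("John  Brown", "5 Mill Lane", "ef3 4gh"),
    Customer("Asha Patel", "9 Park Road", "IJ5 6KL"),
]
deceased = [("john brown", "EF3 4GH")]
movers = {("Asha Patel", "IJ56KL"): ("22 New Road", "MN7 8OP")}

cleaned, suppressed, updated = cleanse(customers, deceased, movers)
print(suppressed, updated)  # 1 1
for c in cleaned:
    print(c)
```

Real screening usually relies on external reference data and more forgiving matching rules than an exact name-and-postcode comparison. The design point the sketch does capture is ordering: suppression runs before updates, so a deceased customer is never refreshed back into a mailing list.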

Not only that, but regular cleansing reduces the time spent on data maintenance, so more time can be devoted to building more predictive models and better relationship-driven customer communications that boost business outcomes. A win-win for everyone!

If you need advice on how we can help keep your data accurate and up to date to minimise algorithmic bias, please get in touch!